Editor’s note: The VISION Conference 2022 will feature expanded exploration of where innovation is rapidly driving change, including trends in high-value specialty crops, sustainability and carbon sequestration programs, and high-tech controlled environment production systems. One emerging topic on the agenda is visual data. Below is an inside look at how visual sensors and computer vision will be crucial to help the entire industry meet the food demands of a growing global population.

Computer vision has experienced a real boom. Drones, satellites, and planes gather data from the sky. Equipment-mounted sensors can measure changes in plant characteristics or soil parameters with optical reflectance sensing. LiDAR sensors can now measure the structure of plants in 3D.

Beyond helping agronomists with data, computer vision is also at the core of enabling autonomous machines, helping machinery react to situations in the field or even detect obstacles. Technology even enables us to act on hyper-accurate location data from satellite imagery, which can provide centimeter-level detail. With all this technology at our disposal, are human eyeballs even needed?

Once these cameras, sensors, and satellites are deployed at scale in fields and greenhouses, they will provide 100% surveillance coverage round the clock. When this happens, remote agronomy and, to a large extent, remote agriculture could become a reality. As autonomous machines and robots take on an increasing number of roles, a large workforce may no longer be needed. While today most fruit and vegetables are picked and packed by hand, a report from S&P Global forecasts that by 2025, perception systems and picking algorithms will enable aspects of autonomous harvesting in controlled environment agriculture (CEA).

This boom in computer vision isn’t only relevant in agriculture. In fact, as the most mature field in modern AI, it is permeating every sector of the economy. Automating visual capabilities brings endless market opportunities across every sector. For humans, vision is the most developed sense, the one we use most to perceive the world around us. Professor of Medical Optics David Williams explains that “More than 50 percent of the cortex, the surface of the brain, is devoted to processing visual information.”

It is no coincidence that the part of the human brain responsible for analyzing visual information is larger than the part devoted to any other sense. Artificial neural networks are an essential part of machine learning and the backbone of modern visual technologies. In the words of Professor Williams, “Understanding how vision works may be a key to understanding how the brain as a whole works.”

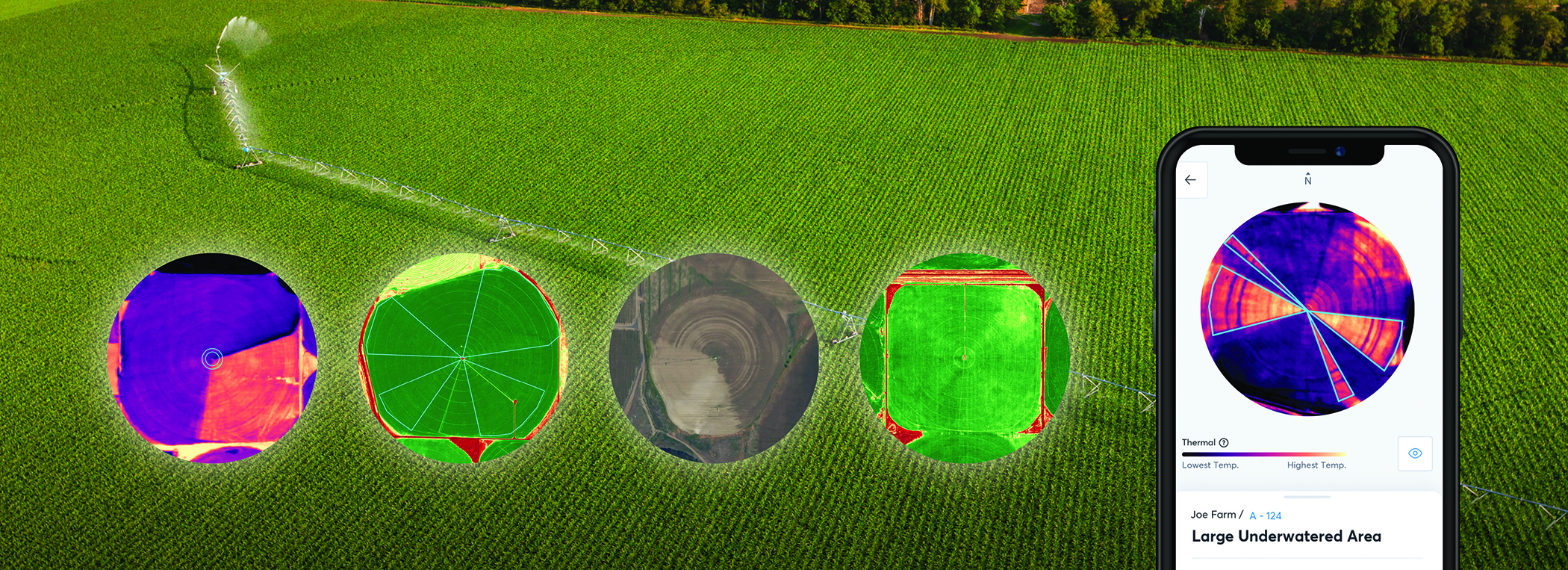

Visual technologies are already powering developments in food and agriculture that will change the way the world grows, manufactures, transports, and consumes food. Computer vision is arguably the most technologically advanced field when it comes to AI. The unprecedented wealth of visual data these technologies generate can be harnessed and processed through machine learning and then fed back to food growers or to autonomous machines such as irrigation pivots. Even after the harvest, computer vision is already being used for crucial tasks such as sorting and grading fruit and vegetables, work which, when done by humans, is inconsistent, time-consuming, and expensive.

The impact of this technology is huge. Visual sensors and computer vision will be crucial to help the entire industry meet the food demands of a growing global population. World Bank data suggest that by 2025, the majority of the food and agriculture sectors will be deeply impacted by the adoption of visual technologies, such as image recognition, cameras, robotics, and much more. It is no surprise that computer vision and AI technologies are at the heart of a new wave of promising tech startups across many verticals including retail, construction, insurance, security, and agriculture.

Improving Existing Processes as the Starting Point for a Revolution

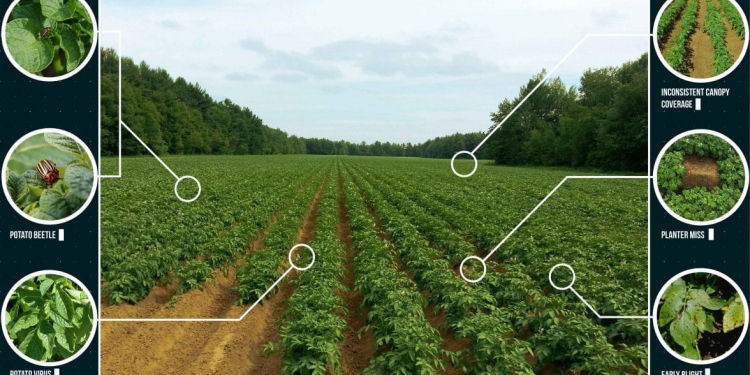

There is a plethora of visual technologies available to food growers. This includes any device or tool that captures, analyzes, filters, displays, or distributes visual data. These systems are designed to leverage computer vision, machine learning, or artificial intelligence to make sense of all the visual data, and either deliver actionable insights or act upon them autonomously.

A recent report from LDV Capital on Visual Technologies highlights some key future-facing trends that will stem from the adoption of visual technologies among food growers over the next five years. The most interesting thing about these trends is that they mostly emphasize the improvement and adoption of existing technologies. It won’t be a revolution but a progressive evolution as visual technologies become mainstream. For example, the report points to machine learning algorithms ingesting drone, plane, and satellite images of increased resolution and greater spectral range, further enabling remote agronomy. Also, as processing speeds increase, equipment-mounted sensing will enable plant-level decisions such as precision weed spraying and seed placement.

Can Every Existing Process be Automated and Managed Remotely?

With so many “eyes” monitoring and assessing plants 24/7, and visual technologies that extensively cover entire fields or greenhouses, can farming and agronomy be managed remotely in the near future? From experience with our customers, I know that many food growers already make far fewer trips to the field thanks to insights or imagery captured by machines and delivered to them. What is more, their ability to tackle problems such as pests is more targeted and precise. Instead of relying on routine spot-checks, growers can use these devices to monitor 100% of their crops, 100% of the time.

While computer vision is a major breakthrough which will redefine the way in which food is grown and processed, it isn’t the be-all and end-all. Other complementary technologies are needed to enable us to see under the leaf and under the soil, which is just as crucial to getting the full picture. One example is monitoring and analyzing the microbiome through dedicated sensors that measure the abundance, diversity, and colonization of microorganisms in above- and below-ground plant organs.

Collecting, integrating, and making sense of all this data will be a key challenge in harnessing the power of the growing technology stack on which food growers will rely. Food growers have always relied upon hundreds of signals from the field, but these emerging tools and platforms mean that they will need to orchestrate insights from a growing number of sources. The ultimate goal is to create a unified system that brings the full, clear picture needed to enable better high-level agronomic decisions.